Korean | English

Target readers: I initially aimed to create a guide suitable for complete beginners, but there are sections that require some prior knowledge. It seems more appropriate for those who have started studying Haskell and still feel uneasy after exploring topics like Monad and Arrow.

I work (or aim to work) professionally with functional programming (hereafter referred to as FP), so you could call me a profesional. However, studying the foundations of FP is more like a hobby (closer to being an amateur). While having a deep understanding of the foundational knowledge isn’t strictly necessary to work with FP, my personal curiosity drives me to explore, imagine, and organize these ideas as I dig into various sources.

Since I have few peers to discuss these topics with, I lack reliable means to verify whether my understanding is correct. Please note that the knowledge presented here is based on my imaginings and not formally validated, so take it with caution.

This post begins with pure functions and explores the terms and concepts necessary for getting started with functional programming.

The starting point of challenges when studying functional programming is pure functions.

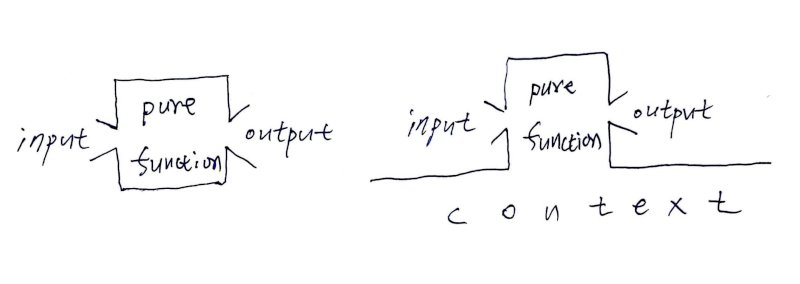

In FP, a function always produces the same output for the same input. Whether you rut it today, tomorrow, or multiple times, the result will not change. It does not rely on global variables or external states, depending solely on its input. As long as the input is known, the output can be determined. This property is known as referential transparency.

※ Although not a complete academic definition, any element that influences the behavior of a function but is not explicitly defined in its input or output is often referred to as an Effect.

Such restrictions may feel overly strict, making one wonder if programming within these constraints is even feasible. How can practical programs be created without relying on Effects? Real-world applications often involve IO operations that are indispensable. To build useful programs, Effects are necessary. (Of course, some libraries designed for use as part of a program may lack Effects, but if the program’s results need to be observed, a completed program without Effects is ultimately impossible.)

Many textbooks and promotional materials claim that functional programming does not include Effects. But since practical programs inherently depend on Effects (especially for IO), what does this really mean? Rather than aiming for a design entirely without Effects, the goal is to effectively separate them.

This means handling as much logic as possible in a “pure” way, even if it’s not entirely free of Effects, and delegating the well-isolated impure parts to the runtime system. Programmers focus on the pure logic without needing to directly deal with the impure sections. Thus, from their perspective, functional programming can still be described as “pure.”

This separation approach aligns with concepts like monads in Haskell, where impure computations are encapsulated and managed explicitly, enabling programmers to reason about their code as though it were purely functional.

Why follow referential transparency? What good comes from establishing such a strict foundation from the start?

※ In FP, a function without Effects is called a pure function, while a function with Effects is often referred to as an action or, sometimes, a procedure instead of a function. This distinction highlights the difference between functions that only rely on their inputs to produce outputs and those that interact with external systems (such as performing IO operations or modifying state).

I will show a simple procedural pseudocode.

funcPlus v

return v + v * global

funcMinus v

return v - v * global

main

global = 1.1

init = 1

res = funcPlus init

res = funcMinus res

print res In funcPlus and funcMinus, the necessary information is not passed as parameters, but instead is stored in and accessed via a global variable. These functions depend on the global state, which is not provided as input. This means that they are not referentially transparent, not pure functions, they have effects, and they are considered actions.

In FP, global variables are not allowed. All necessary information must be passed as parameters to functions.

funcPlus gparam v

return v + v * gparam

funcMinus gparam v

return v - v * gparam

main

global = 1.1

init = 0

res = funcPlus global init

res = funcMinus global res

print res In FP, there is no concept of variables that can store values through assignment. Unlike imperative programming, where you can assign a value to a variable like res, functional programming avoids mutating state. Instead, values are passed through functions as parameters and returned as results. Therefore, you cannot “remember” a value by assigning it to a variable; each computation is based solely on its inputs and produces outputs without altering any external state.

main

print (funcMinus 1.1 (funcPlus 1.1 0))As in the example above, when one function finishes, its result must be immediately passed to the next function.

Performing an operation on data,

passing the modified data to the next function for further processing,

and so on may seem like a series of sequential steps.

However, if you consider the scenario before any initial value is provided - when the value does not yet exist - it reveals…

\init global -> print (funcMinus global (funcPlus global init))If we write it in a way that clearly shows the empty state of the information, it looks like this:

\_ _ -> print (funcMinus _ (funcPlus _ _))model it using only pure functions.

In a Turing machine, there is a concept of state, but in the lambda calculus, there’s no place to hold a state. Sho how can the lambda calculus do everything a Turing machine can? It seems extremely cumbersome, but as shown in the example above, everything can be passed as parameters. However, it’s obvious that programming by passing global every single time is quite tedious. As the amount of dependent information increases, while theoritically possible, it becomes almost impractical in reality.

Not “lambda calculus”, but in programming, there are sometimes documents that refer to lambda functions simply as “anonymous functions” without further explanation. Since they don’t have a name, it’s easy to assume that they can’t be called elsewhere in the program. However, looking at their usage, there are important characteristics that need to be highlighted.

You can make it so that when x is received, it returns a function instead of a value, like this.

\x -> (\y -> doSomething x y)When you provide x, the function \y->..., which returns a function, clearly references x, even though x wasn’t directly passed as an input. This might seem like it’s not a pure function, because it looks like the function is accessing information not supplied as input. However the function \y->... is pure in a certain sense. Once x is passed in, the x within the function is a captured constant. it seems like it’s accessing external information, but the x inside the function is actually a value that has already been fixed and bound to the context. This is done without modifying the function’s body; the value of x is captured in the context, and when needed, it’s retrieved from there. This combination of a context and a function that references it is called closure. Inside the \y->..., x is considered a free variable because it it not bound within the lambda’s head.

While this behavior may seem impure, it’s a hint for solving impure real-world tasks while still keeping the core functionalty pure!

In lamb calculus, a lambda function without free variable is called a combinator. When reading Haskell textbooks, it’s more convenient to think of a combinator as a function that allows you to combine functions or values of a certain type. This make sense because, when a function has no free variables, it it independent of any context and can bd seen as a “combinable” element. In this sense, it essentially carries the same meaning.

※ Please refer to Combinatory logic

\v gparam -> v + v * gparam -- (A)

\v gparam -> v - v * gparam -- (B)We will define the two functions and then define a combinator to combine them as follows.

\f g -> \v -> g (f v) -- it's the familiar concept of function composition.※ If we can combine f and g using a combinator like the one above, then f and g are also called combinators.

If we define it like this, there’s no way to pass gparam, so we need to make sure that both g and f receive gparam as an argment.

\f g -> \v -> g gparam (f gparam v)Now, if we place the free variable gparam in the lambda head,

\gparam -> \f g -> \v -> g gparam (f gparam v)Doen’t \gparam seem similar to the role of a global variable? The functions f and g below \gparam-> have access to gparam. Once we provide a value for gparam, it allows us to combine the two function, and it returns a \f g->... function that is ready to apply gparam to each of the functions. By passing (A) and (B) into this function, we can achieve the desired behavior. By defining a new combinator that can combine functions with gparam, we can now combine(compose) these functions.

Although we’ve managed to achieve the desired behavior while maintaining referential transparency without using global variables, it’s easy to predict that as global informations continues to grow, it will become increasingly difficult to maintain this approach.

Now, even though we still need to pass gparam, let’s try to make it so that we don’t have to worry about it anymore.

They may seem similar, but they are actually different. Passing some arguments to a function while keeping others fixed is called Partial application, while Currying refers to a higher order function that takes one argument at a time and returns a function that accepts the remaining arguments.

\x y -> x + yif we apply currying to the above,

\x -> (y -> x + y)To obtain the function \y -> x + y, you need to provide a value for x by passing through \x. If you have this function in your hands, it means the function “knows” the value of x. This implies that the value of x is present in the context.

By shifting the perspective, let’s change funcPlus and funcMinus so that instead of returning a value, they become functions that take gparam as an argument and return value. In other words, they will perform all possible operations before receiving gparam, and only when gparam is provided will they return the final value. This will ensure that the returned entity is a function that becomes a value once gparam is received.

\v -> \gparam -> v + v * gparam -- (C)

\v -> \gparam -> v - v * gparam -- (D)Even if we insert v, it won’t immediately produce a value by reducing and returning it. instead, it will return a function that becomes a value only once gparam is provided.

Now, let’s define a combinator to combine(compose) these two.

\f g -> (\initV -> (\gparam -> g ((f initV) gparam) gparam))It may seem a bit complicated, but we are applying gparam to the result of f, passing it to g, and then applying gparam again to the function created in this way. This is alomost the same as the combinator we defined earlier, but with the following differences.

-- \gparam -> (\f g -> (\initV -> g gparam (f gparam initV)))

\f g -> (\initV -> (\gparam -> g (f initV gparam) gparam)) -- (E)It’s essentially just a rearrangement of the order in which the arguments are received, and the result is the same. if we have f, g, and initV, we still need to receive gparam at the end in order to produce the final value.

Types serve as a “language” for communicating with the compiler. To delev into type-level programming, you need to understand some quite complex properties. Of course, we’re not aiming for a extensive (formal) explanation of types here. Instead, we’ll focus on one paricular property that is often not discussed.

Types can be seen as one way of marking tasks that will be done later(I haven’t seen any documents that discuss this in the same way; it’s just my own imagination). They indicate that some work needs to be done, but for now, you just give it a name and move on. When the time comes, the task can be executed.

initV, the functions (C) and (D) will become functions that return a value only after receiving gparam.(E) combinator,gparam to produce a value.In haskell, it can be expressed as follows:

data RequireG a = { runner :: gparam -> a }For now, let’s assume we’ve named the type that requires gparam to produce a value as RequireG. if we ever need the value, we can simply run a runner that provides gparam.

f :: RequireG a

g :: RequireG a

(마) :: RequireG a -> RequireG a -> RequireG aCombination! Even if there are tens or hundreds of RequireG functions, you can compose all of them using (E). Beneath the surface, there might be an enormous number of processes, but from above the surface, it all appears as just a single function of type RequireG. You can use this combination of RequireG typed functions as a single value, and when the final value is needed, simply provide the global-like value to the runner.

The assembled whole is pure,

but from the perspective of certain pieces, it may appear impure.

Now, it seems we’re ready to dive into the final topic. so far, we’ve briefly introduced the terminology needed to discuss this part. Next, we’ll explore how to handle the results of individual functions as if they were state while composing these functions.

In FP, where there is no state, a mechanism to replace state is essential. Many of the topics in Haskell that are notorisously difficult for beginners are often tied to this concepts. What we need is a way to “effectively model” impure tasks using pure functions.

funcPlus gparam v

return v + v * gparam

funcMinus gparam v

return v - v * gparam

main

global = 1.1

init = 0

res1 = funcPlus global init -- f1

res2 = funcMinus global res1 -- f2

res3 = res1 + res2 -- Each function's result is accessed separately.

print res3 Let’s look at cases where the individual results of the previously composed functions, like in the res3 part, are needed. if this isn’t resolved, building practical programs becomes very challenging.

### How to use closures in lambda functions

In FP, it’s often said that there are no variables, but if we take a slightly different perspective, that’s not entirely true. There is one way to handle it: simply bind them in the lambda head.

\init global -> print (funcMinus global (funcPlus global init))Let’s take the pattern where values were immediately passed to the next function and instead bind the results of each function in the lambda head.

\init -> (\res1 -> (\res2 -> (print res1 + res2)) (funcMinus global res1)) (funcPlus global init))Let’s simplify the example by renaming the functions to f1 and f2 and capture their results in the lambda head.

-- ___________________________________________

\init -> (\res1 -> (\res2 -> res1 + res2) (f2 res1)) (f1 init)

-- ^^^^^^^^^^^^^^^^^^^^^^

-- Please note that you can access res1 here.To make it clearer, we can define a combinator that applies a function to a value, makeing the code more readable and simplifying the function composition.

(>>>>>) v f = f vYou can use an infix operator to place an operation between two arguments. it can be written a v >>>>> f.

\init -> (f1 init) >>>>> (\res1 -> (f2 res1) >>>>> (\res2 -> res1 + res2))If the precedence of the (>>>>>) operation is set to right-associative, you can omit the parentheses as shown below.

\init -> (f1 init) >>>>> \res1 -> (f2 res1) >>>>> (\res2 -> res1 + res2)If function application has a higher precedence than >>>>>, (the end of the lambda function definition corresponds to the end of the expression.)

\init -> f1 init >>>>> \res1 -> f2 res1 >>>>> \res2 -> res1 + res2Haskell defines do as syntactic sugar. Similary, we can define a syntactic sugar for >>>>> and \res ->, called dolike, to make the code look like the exmple below.

job init = dolike

res1 <- f1 init

res2 <- f2 res1

return res1 + res2We’ve now created a combination that allows us to access the results of each funtion separately, which is both conveninet and readable.

This approach is similar to the way monad binds work, where each action’s individual results are stored. It’s important to clarify that we’re discussing how the monad’s bind operation stores these results, not the purpose of the monad itself, which is to handle effects. Here, we’re only focusing on one aspect of handling effects.

The Haskell do notation, including the use of monads, was introduced by Philip Wadler. It’s impressive how he was able to design such a system that feels so familiar and natural. The way it all fits together is truly remarkable.

(※ The person who first introduced the concept of monads in programming theory was Eugenio Moggi.)

@todo I am trying to demonstrate how to combine actions that take gparam(with effects) by applying all the concepts we’ve discussed above, but I haven’t fully organized my thoughts yet. (November 2024)

You can design a more explicit combinator that carries data around, rather than relying on closures.

Let’s consider a case where we don’t know how many functions we need to combine, so we also don’t know how many result values need to be remembered. What’s good way to represent this uncertain quantity of date?

The first thing that comes to mind is the recursive data type, list

data List a = Null | Cons a (List a)While a recursive data type like a list seems like the most straightforward solution (and it can work), there’s an even simpler type available.

Let’s look at 2-tuples. While a tuple itself is not a recursive data type, you can create a nested structure by placing tuples inside each othter. (Of course, it doen’t have to be tuples; any structure that allows nesting will work. However, among the existing structures, tuples and one of simplest.)

If you want to maintain the results of both f a and the subsequent g a when given a as input,

1. you can represent this with a 2-tuple (a, a).

1. First, apply f to the first element: (f a, a)

1. Then, apply g to the second element: (f a, g a)

1. If h follows, you can extend it like this: (f a, (g a, h a)).

This approach is similar to the one used by Arrows.

Function combination with Arrow

The goal here is not to academically understand the solutions found by many brilliant minds, but to develop the ability to recognize and appreciate them by reading practical Haskell code.

In this section, we did not aim to explore Monads or Arrows in their entirety, but instead looked at how they address the problems arising from pure functions. This perspective helped me understand why combinators are designed the way they are. When encountering complex combinators that are hard to grasp, it might be helpful to look for parts of the code that behave similarly to the examples dicussed above, and approach them this way.

Although I have not yet reached my final destination, I am opening this up early. There isn’t an article that specifically organizes this kind of content, so I hope to spark conversations with those who are interested to see where I might be wrong. I firmly believe that there is a smoother path for engineers who have only dealt with imperative programming, and I am navigation it on my own for now.